In this Python tutorial, we will learn about PyTorch model eval and work through different examples of evaluating models. We will cover the following topics.

- PyTorch model eval

- PyTorch model eval train

- PyTorch model evaluation

- PyTorch model eval vs train

- PyTorch model eval vs no_grad

- PyTorch model eval dropout

- PyTorch model.eval batchnorm

- PyTorch model eval requires_grad

PyTorch Model Eval

In this section, we will learn how to put a PyTorch model into evaluation mode in Python.

- eval() is a kind of switch for the parts of a model that behave differently during training and evaluation, such as dropout and batch normalization layers.

- It sets the model to evaluation mode, in which normalization layers use their running statistics instead of the statistics of the current batch.
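As a minimal sketch of the switch described above, the snippet below builds a small hypothetical `nn.Sequential` model (the layers and sizes are arbitrary assumptions, not part of the tutorial's model) and inspects the `training` flag that `eval()` and `train()` toggle on the model and all of its submodules.

```python
import torch
from torch import nn

# Hypothetical toy model; the layer sizes are arbitrary.
model = nn.Sequential(
    nn.Linear(4, 8),
    nn.BatchNorm1d(8),
    nn.Dropout(p=0.5),
    nn.Linear(8, 2),
)

# A freshly constructed module starts in training mode.
flag_at_start = model.training

# eval() sets training=False on the model and on every submodule.
model.eval()
flags_in_eval = [m.training for m in model.modules()]

# train() switches everything back to training mode.
model.train()
flags_in_train = [m.training for m in model.modules()]

print(flag_at_start, flags_in_eval, flags_in_train)
```

Layers such as dropout and batch normalization read this flag in their forward pass to decide which behavior to use.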

Code:

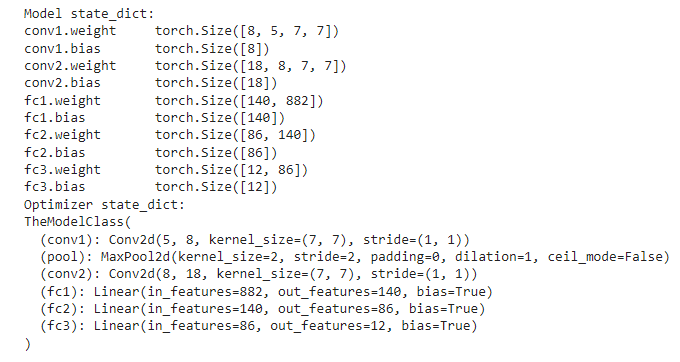

In the following code, we import the libraries we need, define a small model, save and reload its weights, and switch the model to evaluation mode.

- nn.Conv2d() is used to create a 2D convolution layer.

- nn.MaxPool2d() is used to apply 2D max pooling over an input signal composed of several input planes.

- nn.Linear() is used to create a fully connected (feed-forward) layer.

- model = TheModelClass() is used to initialize the model.

- optimizers = optimize.SGD(model.parameters(), lr=0.001, momentum=0.8) is used to initialize the optimizer.

- print("Model state_dict:") is used to print the model state_dict.

- print("Optimizer state_dict:") is used to print the optimizer state_dict.

- torch.save(model.state_dict(), 'model_weights.pth') is used to save the model weights.

- model.load_state_dict(torch.load('model_weights.pth')) is used to load the saved weights into the model.

- model.eval() is used to switch the model to evaluation mode.

    import torch
    from torch import nn
    import torch.optim as optimize
    import torch.nn.functional as f

    # Define model
    class TheModelClass(nn.Module):
        def __init__(self):
            super(TheModelClass, self).__init__()
            self.conv1 = nn.Conv2d(5, 8, 7)
            self.pool = nn.MaxPool2d(2, 2)
            self.conv2 = nn.Conv2d(8, 18, 7)
            self.fc1 = nn.Linear(18 * 7 * 7, 140)
            self.fc2 = nn.Linear(140, 86)
            self.fc3 = nn.Linear(86, 12)

        def forward(self, X):
            X = self.pool(f.relu(self.conv1(X)))
            X = self.pool(f.relu(self.conv2(X)))
            # Flatten to the size fc1 expects (18 channels of 7 x 7)
            X = X.view(-1, 18 * 7 * 7)
            X = f.relu(self.fc1(X))
            X = f.relu(self.fc2(X))
            X = self.fc3(X)
            return X

    # Initialize the model and the optimizer
    model = TheModelClass()
    optimizers = optimize.SGD(model.parameters(), lr=0.001, momentum=0.8)

    # Print the name and size of each entry in the model state_dict
    print("Model state_dict:")
    for param_tensor in model.state_dict():
        print(param_tensor, "\t", model.state_dict()[param_tensor].size())

    # Print the entries of the optimizer state_dict
    print("Optimizer state_dict:")
    for var_name in optimizers.state_dict():
        print(var_name, "\t", optimizers.state_dict()[var_name])

    # Save the weights, load them back, and switch to evaluation mode
    torch.save(model.state_dict(), 'model_weights.pth')
    model.load_state_dict(torch.load('model_weights.pth'))
    model.eval()

Output:

After running the above code, we get the following output, in which the name and size of each entry in the model state_dict are printed on the screen. The model is then left in evaluation mode.
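One reason to call eval() after loading weights is that a newly constructed model starts in training mode, and load_state_dict() does not change that. The sketch below illustrates this with a hypothetical helper `make_model()` and an in-memory `io.BytesIO` buffer standing in for the `model_weights.pth` file; both are assumptions made to keep the example self-contained.

```python
import io

import torch
from torch import nn


def make_model():
    # Hypothetical stand-in for the tutorial's model class.
    return nn.Sequential(nn.Linear(6, 6), nn.Dropout(p=0.5), nn.Linear(6, 3))


trained = make_model()

# Save the weights to an in-memory buffer instead of a file on disk.
buffer = io.BytesIO()
torch.save(trained.state_dict(), buffer)
buffer.seek(0)

# Load the weights into a new instance of the same architecture.
restored = make_model()
restored.load_state_dict(torch.load(buffer))
mode_after_load = restored.training  # still in training mode here

# Switch both models to evaluation mode before comparing their outputs.
trained.eval()
restored.eval()
x = torch.randn(5, 6)
with torch.no_grad():
    same_output = torch.allclose(trained(x), restored(x))

print(mode_after_load, restored.training, same_output)
```

Without the eval() calls, the dropout layer would still be active and the two forward passes would generally not match.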


PyTorch Model Eval Train

In this section, we will learn how to switch a PyTorch model between eval and train modes in Python.

- After training, eval() is called on the trained model to switch it to evaluation mode before it is evaluated, and train() switches it back to training mode so that training can continue.

- eval() is a kind of switch for the parts of a model that behave differently during training and evaluation, such as dropout and batch normalization layers.

Code:

In the following code, we import the modules we need, save a checkpoint, load it into a new model, and switch that model between eval and train modes.

- netout = net() is used to initialize the model.

- print(netout) is used to print the model.

- optimizers = optim.SGD(netout.parameters(), lr=0.001, momentum=0.9) is used to initialize the optimizer.

- torch.save() is used to save the checkpoint.

- models.load_state_dict(CheckPoint['model_state_dict']) is used to load the model weights.

- optimizers.load_state_dict(CheckPoint['optimizer_state_dict']) is used to load the optimizer state.

- models.eval() is used to switch the model to evaluation mode, and models.train() is used to switch it back to training mode.

    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torch.nn.functional as fun

    class net(nn.Module):
        def __init__(self):
            super(net, self).__init__()
            self.conv = nn.Conv2d(5, 8, 7)
            self.pool = nn.MaxPool2d(2, 2)
            self.conv1 = nn.Conv2d(8, 18, 7)
            self.fc = nn.Linear(18 * 5 * 5, 140)
            self.fc1 = nn.Linear(140, 86)
            self.fc2 = nn.Linear(86, 12)

        def forward(self, y):
            y = self.pool(fun.relu(self.conv(y)))
            y = self.pool(fun.relu(self.conv1(y)))
            # Flatten to the size fc expects (18 channels of 5 x 5)
            y = y.view(-1, 18 * 5 * 5)
            y = fun.relu(self.fc(y))
            y = fun.relu(self.fc1(y))
            y = self.fc2(y)
            return y

    netout = net()
    print(netout)
    optimizers = optim.SGD(netout.parameters(), lr=0.001, momentum=0.9)

    # Additional information
    epoch = 7
    path = "model.pt"
    loss = 0.6

    # Save a checkpoint with the model and optimizer state
    torch.save({
        'epoch': epoch,
        'model_state_dict': netout.state_dict(),
        'optimizer_state_dict': optimizers.state_dict(),
        'loss': loss,
    }, path)

    # Load the checkpoint into a new model and a new optimizer
    models = net()
    optimizers = optim.SGD(models.parameters(), lr=0.001, momentum=0.9)
    CheckPoint = torch.load(path)
    models.load_state_dict(CheckPoint['model_state_dict'])
    optimizers.load_state_dict(CheckPoint['optimizer_state_dict'])
    Epoch = CheckPoint['epoch']
    Loss = CheckPoint['loss']

    # Evaluation mode for inference, training mode to resume training
    models.eval()
    models.train()

Output:

In the following output, we can see the structure of the network printed on the screen. The restored model is then switched to evaluation mode and back to training mode.
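A common way to combine the two modes is to alternate them inside the training loop: train() before the weight updates and eval() before validation. The sketch below illustrates this under assumed conditions; the tiny model, the random data, and the three epochs are hypothetical and chosen only to keep the example short.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical model and random data, for illustration only.
model = nn.Sequential(nn.Linear(10, 16), nn.ReLU(), nn.Dropout(0.5), nn.Linear(16, 1))
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = nn.MSELoss()

x_train, y_train = torch.randn(32, 10), torch.randn(32, 1)
x_val, y_val = torch.randn(8, 10), torch.randn(8, 1)

val_losses = []
for epoch in range(3):
    # Training step: dropout is active.
    model.train()
    optimizer.zero_grad()
    loss = loss_fn(model(x_train), y_train)
    loss.backward()
    optimizer.step()

    # Validation step: dropout is off and no gradients are tracked.
    model.eval()
    with torch.no_grad():
        val_losses.append(loss_fn(model(x_val), y_val).item())

print(val_losses, model.training)
```

Because the loop ends with a validation step, the model is left in evaluation mode; calling train() again is needed before any further training.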


PyTorch Model Evaluation

In this section, we will learn about the evaluation of a PyTorch model in Python.

Model evaluation is the process of measuring how well a model performs by using evaluation metrics, such as the loss on a dataset.

Code:

In the following code, we import the torch module, train a small network, and then switch it to evaluation mode.

- torch.nn.Linear() is used to create the layers of the feed-forward network.

- X = torch.randn(bsize, Dinp) and Y = torch.randn(bsize, Doutp) are used to create random tensors that hold the inputs and the target outputs.

- loss_func = torch.nn.MSELoss(reduction='sum') is used to construct the loss function.

- opt = torch.optim.SGD(modl.parameters(), lr=1e-4) is used to initialize the optimizer.

- losses = loss_func(Ypred, Y) is used to compute the loss.

- opt.zero_grad() is used to reset the gradients to zero before the backward pass.

    import torch

    class Net(torch.nn.Module):
        def __init__(self, Dinp, hidd, Doutp):
            super(Net, self).__init__()
            self.linear1 = torch.nn.Linear(Dinp, hidd)
            self.linear2 = torch.nn.Linear(hidd, Doutp)

        def forward(self, X):
            h_relu = self.linear1(X).clamp(min=0)
            ypred = self.linear2(h_relu)
            return ypred

    bsize, Dinp, hidd, Doutp = 66, 1001, 101, 11
    X = torch.randn(bsize, Dinp)
    Y = torch.randn(bsize, Doutp)

    modl = Net(Dinp, hidd, Doutp)
    loss_func = torch.nn.MSELoss(reduction='sum')
    opt = torch.optim.SGD(modl.parameters(), lr=1e-4)

    for t in range(500):
        Ypred = modl(X)
        losses = loss_func(Ypred, Y)
        print(t, losses.item())
        opt.zero_grad()
        losses.backward()
        opt.step()

    modl.eval()

Output:

After running the above code, we get the following output, in which the loss is printed for each of the 500 iterations before the model is switched to evaluation mode.
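Besides the loss, a metric such as accuracy is often computed once the model is in evaluation mode. The following is a minimal sketch, assuming a hypothetical four-class classifier and random inputs and labels; with untrained weights the accuracy value itself is not meaningful, only the evaluation pattern is.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical classifier and random "held-out" data, for illustration only.
classifier = nn.Sequential(nn.Linear(20, 32), nn.ReLU(), nn.Dropout(0.3), nn.Linear(32, 4))
inputs = torch.randn(50, 20)
labels = torch.randint(0, 4, (50,))

# Evaluation mode turns dropout off; no_grad() skips gradient tracking.
classifier.eval()
with torch.no_grad():
    logits = classifier(inputs)
    predictions = logits.argmax(dim=1)
    accuracy = (predictions == labels).float().mean().item()

print(f"accuracy: {accuracy:.2f}")
```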


PyTorch model eval vs train

In this section, we will learn about the difference between eval and train modes of a PyTorch model in Python.

- train() tells the model that it is in the training stage, so layers such as dropout and batch normalization, which behave differently depending on the current mode, use their training behavior.

- eval() does the opposite: it switches those layers to their evaluation behavior. Dropout stops zeroing elements and batch normalization uses its running statistics. The other layers are not deactivated and work as before.

Code:

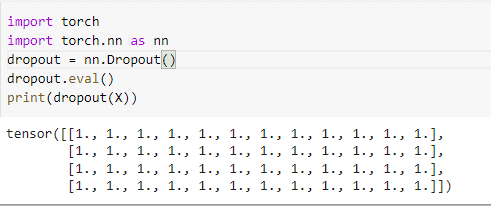

In the following code, we import the libraries we need to see the difference between eval and train modes.

- dropout = nn.Dropout() is used to create a dropout layer, which randomly zeroes elements of its input during training (with probability 0.5 by default).

- X = torch.ones(2, 12) is used to initialize the input tensor.

- dropout.train() is used to switch the layer to train mode.

- dropout.eval() is used to switch the layer to eval mode.

    import torch
    import torch.nn as nn

    dropout = nn.Dropout()
    X = torch.ones(2, 12)

    # Train mode
    dropout.train()
    print(dropout(X))

    # Eval mode
    dropout.eval()
    print(dropout(X))

Output:

After running the above code, we get the following output. In train mode, roughly half of the elements are zeroed and the remaining ones are scaled up to 2, while in eval mode the input is returned unchanged.
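The scaling in train mode comes from dropout multiplying the surviving elements by 1 / (1 - p), so that the expected value of the output matches the input. The sketch below checks this for p = 0.5; the tensor size and the seed are arbitrary assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)

p = 0.5
dropout = nn.Dropout(p=p)
x = torch.ones(1000)

# Train mode: each element is either zeroed or scaled by 1 / (1 - p) = 2.
dropout.train()
train_out = dropout(x)
train_values = set(train_out.unique().tolist())

# Eval mode: dropout passes the input through unchanged.
dropout.eval()
eval_out = dropout(x)

print(train_values, torch.equal(eval_out, x))
```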


PyTorch model eval vs no_grad

In this section, we will learn about the difference between model.eval() and torch.no_grad() in Python.

eval() tells all the layers that the model is in eval mode, so dropout and batch norm layers use their eval behavior instead of their train behavior.

Syntax:

The syntax of eval is:

    model.eval()

no_grad() affects the autograd engine instead: it disables gradient tracking, which reduces memory use and speeds up computation. It does not change how dropout or batch norm layers behave.

Syntax:

The syntax of no_grad is:

    torch.no_grad()
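The two mechanisms are independent of each other, which is why inference code usually uses both. As a minimal sketch with a hypothetical two-layer model, the snippet below shows that eval() alone still tracks gradients, while no_grad() alone leaves dropout in train mode.

```python
import torch
from torch import nn

# Hypothetical model, for illustration only.
model = nn.Sequential(nn.Linear(4, 4), nn.Dropout(p=0.5))
x = torch.ones(2, 4)

# eval() alone: dropout is off, but autograd still tracks the forward pass.
model.eval()
out_eval = model(x)
eval_tracks_grad = out_eval.requires_grad

# no_grad() alone: autograd is off, but dropout is still in train mode.
model.train()
with torch.no_grad():
    out_no_grad = model(x)
no_grad_tracks_grad = out_no_grad.requires_grad
dropout_still_on = model[1].training

print(eval_tracks_grad, no_grad_tracks_grad, dropout_still_on)
```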

PyTorch model eval dropout

In this section, we will learn how dropout behaves in a PyTorch model in eval mode in Python.

In eval mode, dropout is deactivated and simply passes its input through unchanged.

Code:

In the following code, we import the torch module and apply a dropout layer in eval mode.

- nn.Dropout() is used to create a dropout layer, which zeroes elements with a given probability during training.

- dropout.eval() is used to switch the dropout layer to eval mode.

- print(dropout(X)) is used to print the value on the screen.

    import torch
    import torch.nn as nn

    dropout = nn.Dropout()

    # Input tensor (the original snippet used X without defining it)
    X = torch.ones(2, 12)

    dropout.eval()
    print(dropout(X))

Output:

In the following output, we can see that in eval mode the dropout layer returns its input unchanged.
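One related detail: the functional form `torch.nn.functional.dropout` has its own `training` argument, which defaults to True, so it only follows model.eval() when the module's flag is passed in explicitly. The sketch below shows that pattern with a hypothetical module named `FunctionalDropoutNet`.

```python
import torch
from torch import nn
import torch.nn.functional as F


class FunctionalDropoutNet(nn.Module):
    """Hypothetical module that uses the functional dropout API."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 4)

    def forward(self, x):
        x = self.fc(x)
        # Pass self.training so that eval() also disables this dropout.
        return F.dropout(x, p=0.5, training=self.training)


net = FunctionalDropoutNet()
net.eval()

x = torch.ones(3, 4)
with torch.no_grad():
    first = net(x)
    second = net(x)
    linear_only = net.fc(x)

# In eval mode the output is deterministic and equals the linear layer output.
print(torch.equal(first, second), torch.equal(first, linear_only))
```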


PyTorch model.eval batchnorm

In this section, we will learn how batchnorm behaves with model.eval() in Python.

Before moving forward, we should know what batchnorm is.

Batchnorm (batch normalization) is a technique used when training deep neural networks that normalizes the inputs to a layer over each mini-batch.
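In train mode, a batchnorm layer also keeps running estimates of the mean and variance, which are what eval mode uses later. As a sketch, assuming the default momentum of 0.1 in `nn.BatchNorm1d` and random input data, the snippet below checks the update after one training forward pass and confirms that a forward pass in eval mode leaves the estimates untouched.

```python
import torch
from torch import nn

torch.manual_seed(0)

bn = nn.BatchNorm1d(3)  # running_mean starts at 0, running_var at 1
x = torch.randn(64, 3) * 2 + 5

# One forward pass in train mode updates the running statistics:
# running = (1 - momentum) * running + momentum * batch_statistic
bn.train()
bn(x)
expected_mean = 0.1 * x.mean(dim=0)
expected_var = 0.9 * torch.ones(3) + 0.1 * x.var(dim=0, unbiased=True)
mean_matches = torch.allclose(bn.running_mean, expected_mean, atol=1e-4)
var_matches = torch.allclose(bn.running_var, expected_var, atol=1e-4)

# A forward pass in eval mode does not change the running statistics.
bn.eval()
mean_before = bn.running_mean.clone()
bn(x)
unchanged_in_eval = torch.equal(bn.running_mean, mean_before)

print(mean_matches, var_matches, unchanged_in_eval)
```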

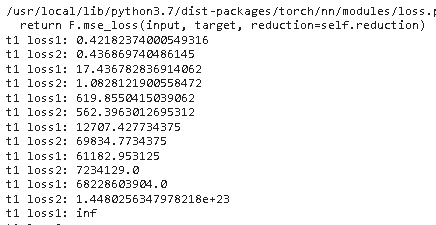

Code:

In the following code, we import the libraries we need to compare train and eval modes for a model with batchnorm.

- wid = 64 is used as the width.

- heig = 64 is used as the height.

- batch_one = torch.ones([4, 1, 64, 64], dtype=torch.float, device=torch.device("cuda")) is used to create the first input batch on the GPU.

- output_one = torch.tensor([.5, .5]).to(torch.device("cuda")) is used to create the target output for the batch.

- optimizers = optim.SGD(nets.parameters(), 0.0001) is used to initialize the optimizer.

- optimizers.zero_grad() is used to zero the parameter gradients.

- loss = criterions(netout, outp) is used to calculate the loss.

- print("t1 loss1:", train_model(networks, batch_one, output_one)) is used to print the training loss.

- print("v1 loss1:", batch_evaluate(networks, batch_one, output_one, True)) is used to print the loss in eval mode.

    import torch
    import torch.nn as nn
    import torch.optim as optim

    class net(nn.Module):
        def __init__(self, image_size_total):
            super(net, self).__init__()
            self.conv = nn.Conv2d(1, 64, 3, padding=1)
            self.relu = nn.ReLU(inplace=True)
            self.bn = nn.BatchNorm2d(64)
            self.max_pool = nn.MaxPool2d(2)
            self.fc = nn.Linear((image_size_total//4) * 64, 2)

        def forward(self, X):
            X = self.conv(X)
            X = self.relu(X)
            X = self.bn(X)
            X = self.max_pool(X)
            X = X.view(-1, self.numfeatures(X))
            X = self.fc(X)
            return X

        def numfeatures(self, X):
            Size = X.size()[1:]  # all dimensions except the batch dimension
            numfeatures = 1
            for i in Size:
                numfeatures *= i
            return numfeatures

    wid = 64
    heig = 64
    networks = net(wid * heig)

    batch_one = torch.ones([4, 1, 64, 64], dtype=torch.float, device=torch.device("cuda"))
    output_one = torch.tensor([.5, .5]).to(torch.device("cuda"))
    batch_two = torch.randn([4, 1, 64, 64], dtype=torch.float, device=torch.device("cuda"))
    output_two = torch.tensor([.01, 1.0]).to(torch.device("cuda"))

    def train_model(nets, batch, outp):
        # One optimization step in train mode
        nets.train()
        optimizers = optim.SGD(nets.parameters(), 0.0001)
        criterions = nn.MSELoss()
        optimizers.zero_grad()
        netout = nets(batch)
        loss = criterions(netout, outp)
        loss.backward()
        optimizers.step()
        return float(loss)

    def batch_evaluate(nets, batch, outp, should_eval):
        # Compute the loss in eval mode or in train mode, without a weight update
        if should_eval:
            nets.eval()
        else:
            nets.train()
        criterion = nn.MSELoss()
        netout = nets(batch)
        loss = criterion(netout, outp)
        return float(loss)

    networks.to(torch.device("cuda"))

    for i in range(60):
        print("t1 loss1:", train_model(networks, batch_one, output_one))
        print("t1 loss2:", train_model(networks, batch_two, output_one))

    print("v1 loss1:", batch_evaluate(networks, batch_one, output_one, True))
    print("v1 loss2:", batch_evaluate(networks, batch_two, output_one, True))
    print("train v1 loss1:", batch_evaluate(networks, batch_one, output_one, False))
    print("train v2 loss2:", batch_evaluate(networks, batch_two, output_one, False))

Note: Before running the code, change the runtime type from None to GPU in Google Colab. After that change, the code runs correctly.

Output:

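After running the above code, we get the following output, in which the loss reported after model.eval() differs from the loss in train mode for the same batches. This happens because batchnorm normalizes with its running statistics in eval mode rather than with the statistics of the current batch.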
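The gap between the two modes can be reproduced on the CPU with a single batchnorm layer. The following sketch uses assumed random data with a mean of about 5 and only one training step, so the running statistics have barely moved from their initial values and the eval-mode output is far from normalized.

```python
import torch
from torch import nn

torch.manual_seed(0)

bn = nn.BatchNorm1d(3)
x = torch.randn(64, 3) * 2 + 5  # batch with mean around 5

with torch.no_grad():
    # Train mode: normalize with the statistics of this batch.
    bn.train()
    train_out = bn(x)

    # Eval mode: normalize with the running statistics collected so far.
    bn.eval()
    eval_out = bn(x)

train_mean = train_out.mean().item()
eval_mean = eval_out.mean().item()
print(train_mean, eval_mean)
```

After more training steps on similar data, the running statistics move closer to the batch statistics and the two outputs become more alike.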


PyTorch model eval requires_grad

In this section, we will learn about PyTorch model eval and requires_grad in Python.

In PyTorch, requires_grad is an attribute of a tensor. If requires_grad is True, autograd tracks the operations on the tensor so that its gradient can be calculated. If it is False, no gradient is calculated for it. Calling eval() does not change requires_grad.

Code:

In the following code, we import the torch module and pass tensors with requires_grad set to False and to True through a dropout layer in eval mode.

- dropout = nn.Dropout() is used to create a dropout layer, which zeroes elements with a given probability during training.

- X = torch.ones(6, 12, requires_grad = False) is used to create the tensor X without gradient tracking.

- dropout.eval() is used to switch the layer to eval mode.

- print(dropout(X)) is used to print the value.

    import torch
    import torch.nn as nn

    dropout = nn.Dropout()
    X = torch.ones(6, 12, requires_grad = False)
    y = torch.ones(6, 12, requires_grad = True)

    dropout.eval()
    print(dropout(X))
    print(dropout(y))

Output:

In the following output, we can see that both tensors pass through dropout unchanged in eval mode, and that the output for y still requires gradients.
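The same holds for model parameters: eval() leaves their requires_grad flags as they are, and turning gradients off is a separate step. The sketch below shows this on a hypothetical two-layer model, using `requires_grad_(False)` to freeze the parameters.

```python
import torch
from torch import nn

# Hypothetical model, for illustration only.
model = nn.Sequential(nn.Linear(4, 4), nn.Dropout(0.5))

# eval() changes layer behavior but leaves requires_grad untouched.
model.eval()
flags_after_eval = [p.requires_grad for p in model.parameters()]

# Freezing the parameters is done explicitly on each parameter.
for p in model.parameters():
    p.requires_grad_(False)
flags_after_freeze = [p.requires_grad for p in model.parameters()]

# With frozen parameters and a plain input, the output tracks no gradients.
out = model(torch.ones(1, 4))
print(flags_after_eval, flags_after_freeze, out.requires_grad)
```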


So, in this tutorial, we discussed PyTorch model eval and covered different examples related to its implementation. Here is the list of topics that we have covered.

- PyTorch model eval

- PyTorch model eval train

- PyTorch model evaluation

- PyTorch model eval vs train

- PyTorch model eval vs no_grad

- PyTorch model eval dropout

- PyTorch model.eval batchnorm

- PyTorch model eval requires_grad

I am Bijay Kumar, a Microsoft MVP in SharePoint. Apart from SharePoint, I have been working on Python, machine learning, and artificial intelligence for the last 5 years. During this time I have gained expertise in various Python libraries such as Tkinter, Pandas, NumPy, Turtle, Django, Matplotlib, TensorFlow, SciPy, Scikit-Learn, etc. for various clients in the United States, Canada, the United Kingdom, Australia, New Zealand, etc.